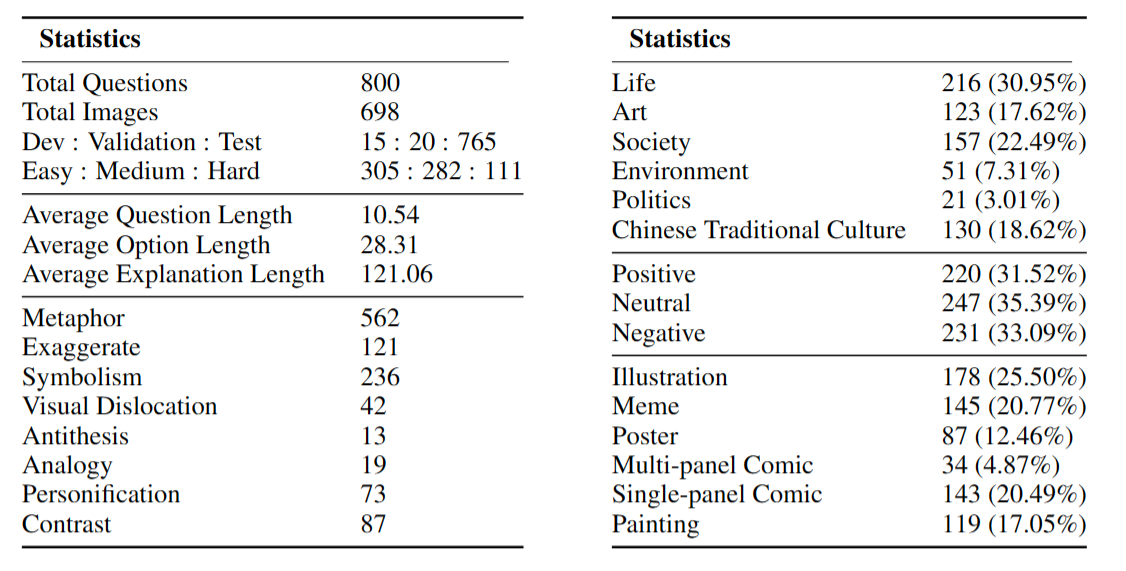

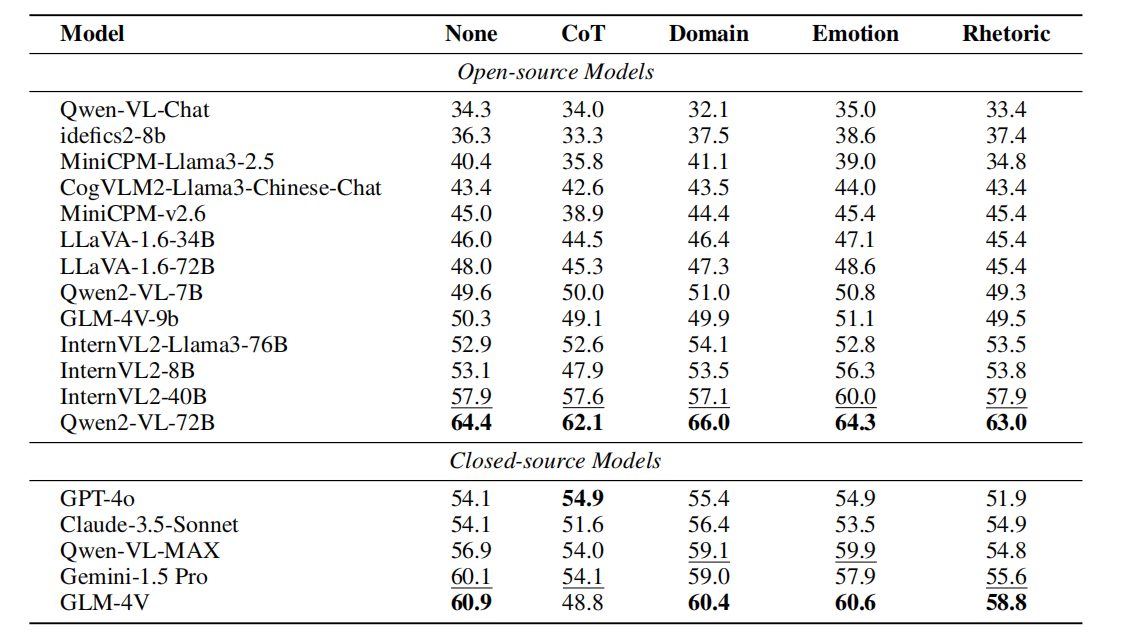

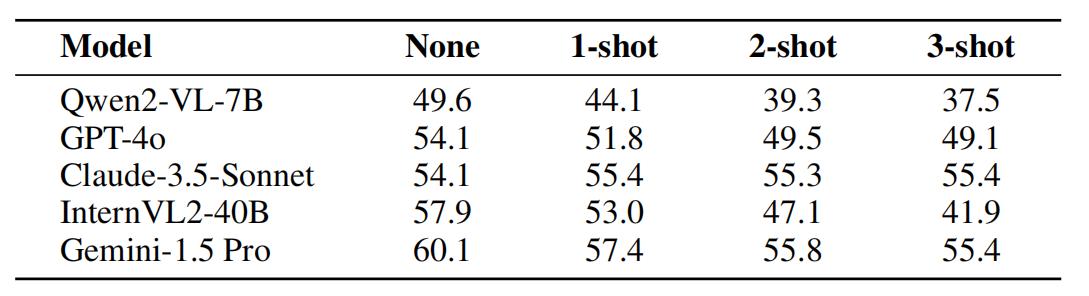

We conduct systematic experiments on both open-source and closed-source MLLMs using CII-Bench. For each model, we employ eight different configurations: None (zero-shot), 1-shot, 2-shot, 3-shot, CoT, Domain, Emotion, and Rhetoric. “None” represents the use of a standard prompt without any additional information. “Emotion” indicates the inclusion of information related to the emotional polarity of the image (e.g., positive, negative) in the prompt, “Domain” involves adding information about the image’s domain (e.g., life, art), and “Rhetoric” refers to including details about the rhetorical devices used in the image (e.g., metaphor, contrast) in the prompt. Additionally, to verify the necessity of images in problem-solving, we select a portion of LLMs to complete tasks without image input.

| Model | Overall | Life | Art | Society | Politics | Environment | Chinese Traditional Culture | Positive | Negative | Neutral |

| GLM-4V | 60.9 | 55.0 | 59.9 | 66.5 | 66.7 | 79.3 | 55.5 | 58.5 | 64.5 | 59.4 |

| Gemini-1.5 Pro | 60.1 | 60.0 | 63.3 | 62.4 | 70.8 | 62.1 | 51.1 | 54.8 | 65.6 | 59.4 |

| Qwen-VL-MAX | 56.9 | 53.3 | 59.2 | 58.8 | 62.5 | 67.2 | 52.6 | 53.9 | 58.3 | 58.0 |

| Claude-3.5-Sonnet | 54.1 | 52.1 | 61.9 | 52.6 | 62.5 | 46.6 | 53.3 | 52.7 | 56.5 | 53.0 |

| GPT-4o | 54.1 | 54.1 | 55.8 | 52.1 | 50.0 | 63.8 | 51.8 | 51.9 | 56.2 | 54.1 |

| Qwen2-VL-72B | 64.4 | 61.7 | 61.2 | 68.0 | 79.2 | 75.9 | 59.9 | 62.7 | 63.8 | 66.4 |

| InternVL2-40B | 57.9 | 55.8 | 55.1 | 61.9 | 62.5 | 70.7 | 52.6 | 54.4 | 58.0 | 60.8 |

| InternVL2-8B | 53.1 | 49.2 | 53.1 | 55.7 | 62.5 | 63.8 | 50.4 | 50.6 | 53.3 | 55.1 |

| InternVL2-Llama3-76B | 52.9 | 50.8 | 53.7 | 51.0 | 58.3 | 67.2 | 51.1 | 54.8 | 51.8 | 52.3 |

| GLM-4V-9b | 50.3 | 46.7 | 48.3 | 53.6 | 54.2 | 62.1 | 48.2 | 51.9 | 52.9 | 46.3 |

| Qwen2-VL-7B | 49.6 | 42.5 | 51.7 | 54.1 | 62.5 | 65.5 | 44.5 | 50.2 | 47.5 | 51.2 |

| LLaVA-1.6-72B | 48.0 | 43.8 | 48.3 | 49.5 | 70.8 | 60.3 | 43.8 | 41.5 | 52.5 | 49.2 |

| LLaVA-1.6-34B | 46.0 | 40.8 | 55.1 | 42.8 | 45.8 | 62.1 | 43.1 | 44.4 | 48.2 | 45.2 |

| MiniCPM-v2.6 | 45.0 | 37.5 | 47.6 | 49.5 | 58.3 | 55.2 | 42.3 | 45.6 | 44.6 | 44.9 |

| CogVLM2-Llama3-Chinese-Chat | 43.4 | 37.1 | 48.3 | 42.3 | 54.2 | 63.8 | 40.2 | 40.3 | 45.7 | 43.8 |

| MiniCPM-Llama3-2.5 | 40.4 | 36.3 | 45.6 | 37.1 | 50.0 | 51.7 | 40.2 | 43.2 | 37.0 | 41.3 |

| idefics2-8b | 36.3 | 25.0 | 46.3 | 38.1 | 41.7 | 56.9 | 32.9 | 32.8 | 39.1 | 36.4 |

| Qwen-VL-Chat | 34.3 | 27.9 | 34.7 | 32.5 | 45.8 | 55.2 | 36.5 | 34.0 | 35.1 | 33.6 |

| Qwen2-7B-Instruct | 32.5 | 33.2 | 34.6 | 30.9 | 35.0 | 40.7 | 28.5 | 33.6 | 30.4 | 33.6 |

| DeepSeek-67B-Chat | 27.1 | 26.6 | 32.7 | 30.9 | 20.0 | 35.2 | 18.2 | 25.7 | 22.2 | 33.2 |

| Llama-3-8B-Instruct | 21.7 | 22.2 | 26.9 | 18.6 | 25.0 | 27.8 | 20.4 | 21.2 | 24.4 | 19.5 |

| Human_avg | 78.2 | 81.0 | 67.7 | 82.7 | 87.7 | 84.0 | 65.9 | 77.9 | 75.2 | 81.6 |

| Human_best | 81.0 | 83.2 | 73.6 | 87.2 | 89.5 | 86.0 | 66.7 | 78.2 | 78.8 | 83.3 |

Overall results of different MLLMs, LLMs and humans on different domains and emotions. The best-performing model in each category is in-bold, and the second best is underlined.